Abstract

We propose a robust and reliable evaluation metric for generative models by introducing topological and statistical treatments for rigorous support estimation.

Existing metrics, such as Inception Score (IS), Frechet Inception Distance (FID), and the variants of Precision and Recall (P&R), rely heavily on supports that are estimated from sample features. However, the reliability of this estimation has been overlooked and has not been seriously discussed, even though the quality of the evaluation depends entirely on it.

In this paper, we propose Topological Precision and Recall (TopP&R, pronounced 'topper'), which provides a systematic approach to estimating supports, retaining only topologically and statistically important features with a certain level of confidence. This not only makes TopP&R robust to noisy features, but also provides statistical consistency. Our theoretical and experimental results show that TopP&R is robust to outliers and to non-independent and identically distributed (Non-IID) perturbations, while accurately capturing the true trend of change in samples. To the best of our knowledge, this is the first evaluation metric that focuses on robust estimation of the support and provides statistical consistency under noise.

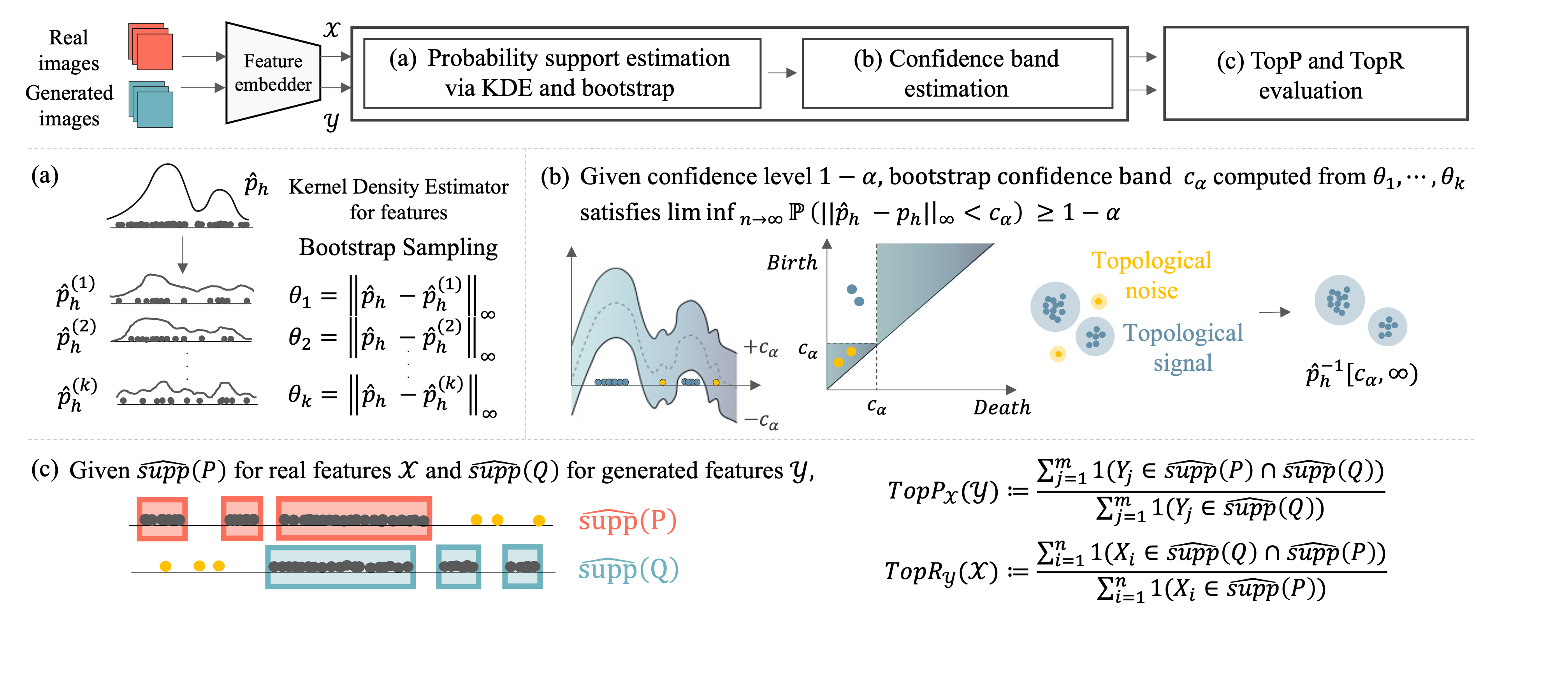

Overview

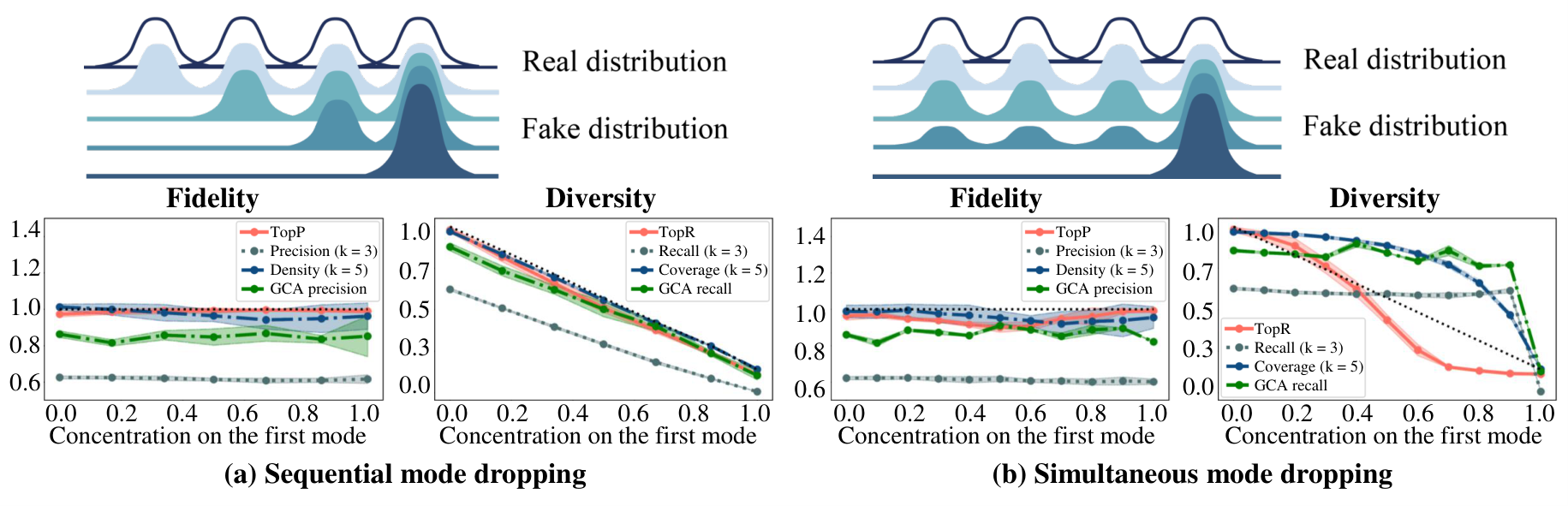

Experiments for mode dropping

Toy dataset case

We compare evaluation metrics for (a) sequential and (b) simultaneous mode-drop scenarios.

The horizontal axis shows the concentration ratio on the distribution centered at […].

We observe that the values of Precision fail to saturate, i.e., they remain mainly below 1, and that Density fluctuates to values greater than 1, showing their instability and unboundedness.

Recall and GCA do not respond to the simultaneous mode drop, and Coverage decays slowly compared to the reference line. In contrast, TopP performs well, staying at its upper bound of 1 under sequential mode drop, and TopR decreases closest to the reference line under simultaneous mode drop.

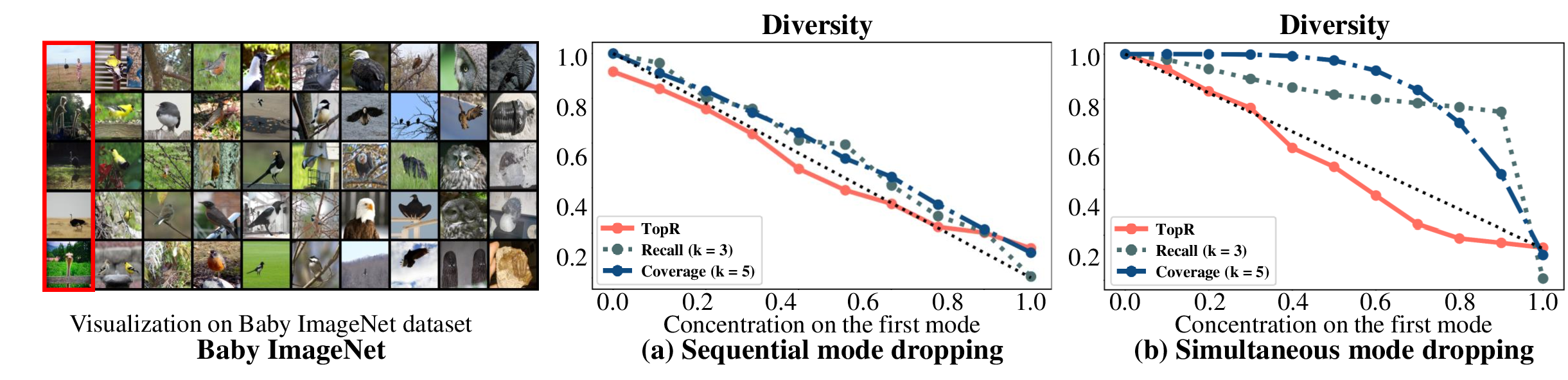
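The two mode-drop scenarios can be sketched as follows. This is only an illustrative setup, not the authors' protocol: it assumes the fake set keeps a fixed total size, that mode 0 is the mode on which samples concentrate, and that each mode is an isotropic Gaussian. The names `mode_drop_counts` and `sample_mixture` are hypothetical.

```python
import numpy as np

def mode_drop_counts(n_modes, per_mode, ratio, scenario):
    """Per-mode sample counts for a fake set of fixed total size.

    ratio in [0, 1] is the concentration ratio: 0 keeps all modes balanced,
    1 places every sample on mode 0 (assumed to be the retained mode).
    """
    counts = np.full(n_modes, per_mode, dtype=int)
    if scenario == "simultaneous":
        # Shrink every non-retained mode by the same fraction at once.
        counts[1:] = int(round(per_mode * (1.0 - ratio)))
    elif scenario == "sequential":
        # Empty the non-retained modes one after another.
        to_remove = int(round(ratio * (n_modes - 1) * per_mode))
        for m in range(1, n_modes):
            take = min(per_mode, to_remove)
            counts[m] -= take
            to_remove -= take
    else:
        raise ValueError(f"unknown scenario: {scenario}")
    # Samples removed from the other modes are moved onto mode 0.
    counts[0] = n_modes * per_mode - counts[1:].sum()
    return counts

def sample_mixture(counts, centers, rng, scale=1.0):
    """Draw counts[i] points from an isotropic Gaussian around centers[i]."""
    dim = centers.shape[1]
    parts = [rng.normal(loc=c, scale=scale, size=(n, dim))
             for c, n in zip(centers, counts)]
    return np.concatenate(parts, axis=0)

print(mode_drop_counts(5, 100, 0.5, "simultaneous").tolist())  # [300, 50, 50, 50, 50]
print(mode_drop_counts(5, 100, 0.5, "sequential").tolist())    # [300, 0, 0, 100, 100]
```

At the same concentration ratio, the sequential scenario has already removed whole modes, while the simultaneous scenario has thinned every mode but removed none, which is the case a diversity metric has to detect from density changes alone.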

Real dataset case

We conducted an additional experiment using Baby ImageNet to investigate the sensitivity of TopP&R to mode drop in real-world data. Since the experiment gradually reduces the fixed number of fake samples until nine modes of the fake distribution vanish, the ground-truth diversity should decrease linearly. In the experimental results, both D&C and P&R still struggle to respond to simultaneous mode dropping. In contrast, TopP&R consistently exhibits a high level of sensitivity to subtle distribution changes. This capability of TopP&R can be attributed to its direct approximation of the underlying distribution, which distinguishes it from the other metrics.

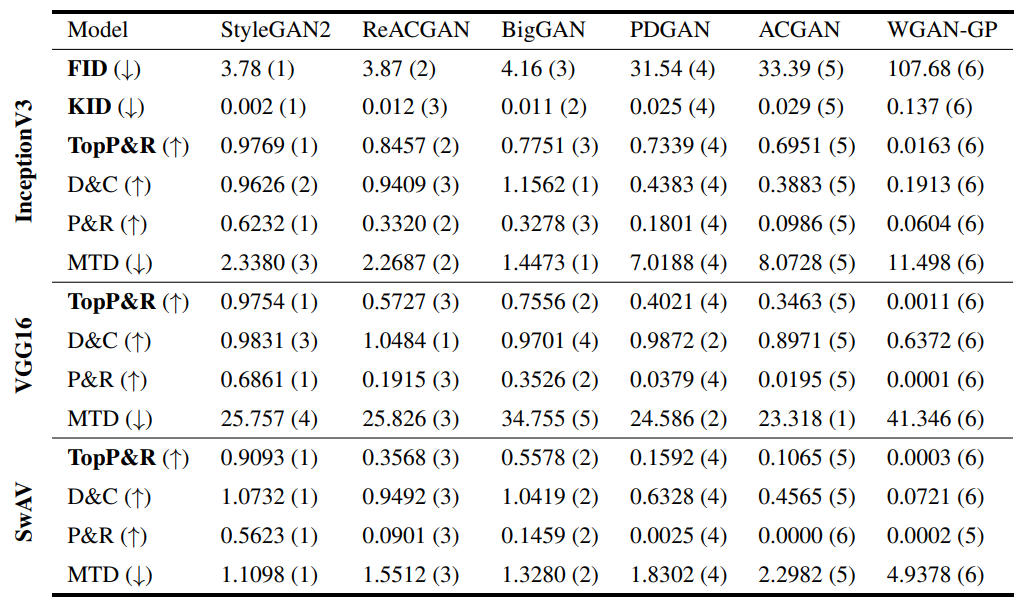

Experiment for ranking various generative models

Generative models trained on CIFAR-10 are ranked by FID, KID, and MTD, and by the F1-scores based on TopP&R, D&C, and P&R. The samples are embedded with InceptionV3, VGG16, and SwAV. The number inside the parentheses denotes the rank based on each metric.
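As a reminder of how a precision/recall pair is collapsed into a single number for ranking, the sketch below computes the standard F1-score (the harmonic mean of the two) and turns a set of scores into ranks. The helper names and the example scores are placeholders for illustration, not values from the experiments.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall; 0.0 when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

def rank_models(scores, higher_is_better=True):
    """Map each model name to its rank, where rank 1 is the best model."""
    ordered = sorted(scores, key=scores.get, reverse=higher_is_better)
    return {name: i + 1 for i, name in enumerate(ordered)}

# Placeholder (precision, recall) pairs for three hypothetical models.
pairs = {"model_a": (0.9, 0.5), "model_b": (0.7, 0.7), "model_c": (0.4, 0.8)}
f1 = {name: f1_score(p, r) for name, (p, r) in pairs.items()}
print(rank_models(f1))
```

For distance-type metrics such as FID, where lower is better, the same helper would be called with `higher_is_better=False`.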

While the rankings given by other metrics fluctuate, TopP&R provides the most stable rankings, and they are similar to those of both FID and KID.

To quantitatively compare how similar each metric's rankings are across the different embedders, we computed the mean Hamming Distance (MHD), where a lower value indicates greater similarity. TopP&R, P&R, D&C, and MTD have MHDs of 1.33, 2.66, 3.0, and 3.33, respectively.
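One plausible way to compute such a score is sketched below. It assumes that the Hamming distance between two rankings counts the models whose rank differs, and that the mean is taken over all pairs of embedders; the text does not spell out the exact definition, so both choices and the function names are assumptions. With three embedders there are three pairs, which would be consistent with the reported values being multiples of 1/3.

```python
from itertools import combinations

def hamming_distance(rank_a, rank_b):
    """Number of models whose rank differs between two rankings."""
    if rank_a.keys() != rank_b.keys():
        raise ValueError("rankings must cover the same models")
    return sum(rank_a[m] != rank_b[m] for m in rank_a)

def mean_hamming_distance(rankings_by_embedder):
    """Average pairwise Hamming distance over all pairs of embedders."""
    pairs = list(combinations(rankings_by_embedder.values(), 2))
    return sum(hamming_distance(a, b) for a, b in pairs) / len(pairs)

# Placeholder rankings of three hypothetical models under each embedder.
rankings = {
    "InceptionV3": {"m1": 1, "m2": 2, "m3": 3},
    "VGG16":       {"m1": 1, "m2": 3, "m3": 2},
    "SwAV":        {"m1": 1, "m2": 2, "m3": 3},
}
print(mean_hamming_distance(rankings))
```

Here two of the three embedder pairs disagree on two models and one pair agrees fully, so the mean is 4/3.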

References

- Tuomas Kynkäänniemi, Tero Karras, Samuli Laine, Jaakko Lehtinen, and Timo Aila. Improved precision and recall metric for assessing generative models. Advances in Neural Information Processing Systems, 32, 2019.

- Muhammad Ferjad Naeem, Seong Joon Oh, Youngjung Uh, Yunjey Choi, and Jaejun Yoo. Reliable fidelity and diversity metrics for generative models. In International Conference on Machine Learning, pages 7176–7185. PMLR, 2020.

- Minguk Kang, Joonghyuk Shin, and Jaesik Park. StudioGAN: A taxonomy and benchmark of GANs for image synthesis. arXiv preprint arXiv:2206.09479, 2022.

- Serguei Barannikov, Ilya Trofimov, Grigorii Sotnikov, Ekaterina Trimbach, Alexander Korotin, Alexander Filippov, and Evgeny Burnaev. Manifold topology divergence: a framework for comparing data manifolds. Advances in Neural Information Processing Systems, 34, 2021.

- Petra Poklukar, Anastasiia Varava, and Danica Kragic. GeomCA: Geometric evaluation of data representations. In International Conference on Machine Learning, pages 8588–8598. PMLR, 2021.

- Martin Heusel, Hubert Ramsauer, Thomas Unterthiner, Bernhard Nessler, and Sepp Hochreiter. GANs trained by a two time-scale update rule converge to a local Nash equilibrium. Advances in Neural Information Processing Systems, 30, 2017.